How to Configure the RobotsTxt Module in Drupal?

Proper robots.txt configuration is a cornerstone of SEO for every Drupal website. The RobotsTxt module is a convenient tool for controlling web crawlers' access, which affects how your site appears in search engines. Let's explore how to create a robots.txt file in Drupal efficiently using the RobotsTxt module.

What is Drupal’s RobotsTxt module?

The RobotsTxt module is designed specifically for Drupal. It lets you manage the robots.txt file, an essential element of SEO, from the administration panel. The robots.txt file tells search engine bots which pages or files to crawl and which to ignore. With the dedicated module, managing robots.txt in Drupal becomes simpler and more effective.

How to Install RobotsTxt Module in Drupal?

You can add this module to your Drupal site in two ways:

- Manual installation: Place the module files in your Drupal modules directory.

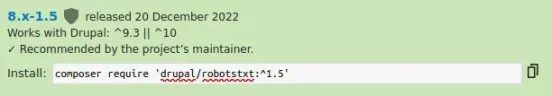

- Composer installation (recommended): Run the Composer command that downloads the RobotsTxt module and its dependencies. You can find all the necessary information in the module documentation.

1. Open the command line and run: composer require 'drupal/robotstxt:^1.5'. This downloads version 1.5 or a later 1.x release, compatible with Drupal 9.3 or newer.

2. Activate the module in the administration panel on the Extend page (/admin/modules).

Alternatively, run drush en robotstxt from the command line.

Once the installation is complete, the module is ready to use.

Best Practices for Creating Your Drupal Robots.txt File

Before editing your Drupal robots.txt file, familiarize yourself with its structure and syntax. Although it may seem straightforward, a misconfigured robots.txt can inadvertently harm your site's SEO.

Fundamentals of robots.txt

Instructions in a robots.txt file are organized in groups, each made up of two parts. In the first part, you specify the user-agent (or user-agents) that the rules below apply to. In the second part, you list the rules that state which files, directories, or pages may be crawled and which may not.

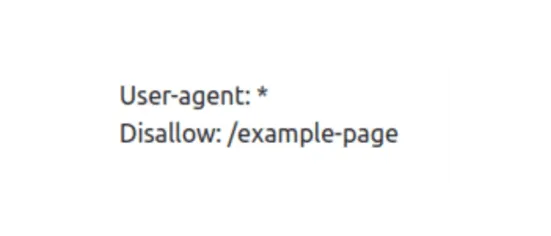

Paths in rules are case-sensitive! For example, the rule Disallow: /example-page placed under User-agent: * will block the page /example-page for all crawlers, but the pages /Example-Page and /EXAMPLE-PAGE will not be affected by this rule. You can add comments using the # character. Crawlers ignore them during processing.
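The case-sensitivity rule can be checked locally. The snippet below is a minimal sketch that uses Python's standard urllib.robotparser module. The rule set mirrors the /example-page example, the helper name is_allowed is made up for illustration, and the parser's simple prefix matching only approximates how a particular search engine behaves.

```python
from urllib.robotparser import RobotFileParser

# Rule set assumed for illustration: block /example-page for every crawler.
ROBOTS_TXT = """\
# Comments like this one are ignored by the parser.
User-agent: *
Disallow: /example-page
"""


def is_allowed(path: str, user_agent: str = "*") -> bool:
    """Return True if the assumed rules let `user_agent` crawl `path`."""
    parser = RobotFileParser()
    # parse() accepts the file content as a list of lines.
    parser.parse(ROBOTS_TXT.splitlines())
    return parser.can_fetch(user_agent, path)
```

Calling is_allowed("/example-page") returns False, while is_allowed("/Example-Page") and is_allowed("/EXAMPLE-PAGE") both return True, because the path comparison respects letter case.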

Essential commands:

- user-agent – defines which bots the rules apply to.

- disallow – prevents bots from crawling specific resources or pages.

- allow – explicitly permits crawling, even within disallowed directories.

- sitemap – indicates the location of the sitemap (this directive is optional).

- * – matches any sequence of characters.

- $ – marks the end of the URL.

You can also use the $ character to block URLs with a specific file extension. The Disallow: /*.doc$ instruction will block URLs that end with the .doc extension. You can do the same with other file types, e.g. using Disallow: /*.jpg$.
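To see how the * and $ symbols behave, it can help to translate a rule into a regular expression. The following is a rough sketch in Python, assuming the common wildcard semantics (* matches any characters, a trailing $ anchors the end of the URL, and a rule without $ matches as a prefix). The function names rule_to_regex and rule_matches are hypothetical and not part of Drupal or the RobotsTxt module.

```python
import re


def rule_to_regex(rule_path: str) -> re.Pattern:
    """Translate a robots.txt path pattern into a compiled regular expression."""
    anchored = rule_path.endswith("$")
    body = rule_path[:-1] if anchored else rule_path
    # Escape each literal piece, then join the pieces with ".*" for every "*".
    regex = ".*".join(re.escape(piece) for piece in body.split("*"))
    # "\Z" pins the match to the very end of the URL when the rule ends in "$".
    return re.compile(regex + (r"\Z" if anchored else ""))


def rule_matches(rule_path: str, url_path: str) -> bool:
    """Return True if `url_path` is covered by the robots.txt pattern."""
    # re.match anchors at the start, so the rule always applies from the first character.
    return rule_to_regex(rule_path).match(url_path) is not None
```

With this sketch, rule_matches("/*.doc$", "/files/report.doc") is True, whereas rule_matches("/*.doc$", "/files/report.docx") is False because the URL does not end with .doc. Dropping the $ turns the rule into a prefix match, so rule_matches("/*.doc", "/files/report.docx") is True.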

Usage examples

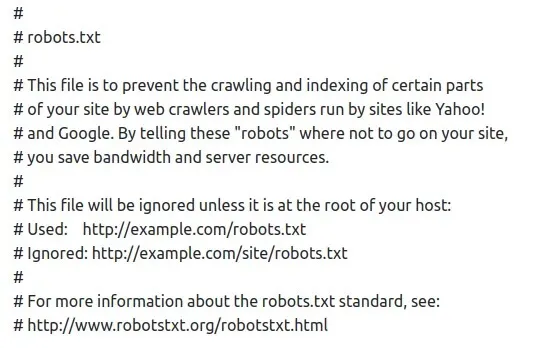

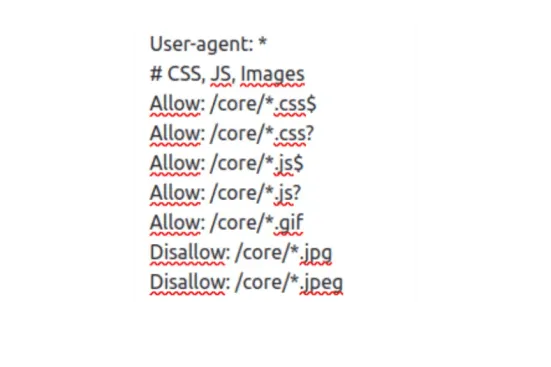

Consider an example robots.txt file with three lines: User-agent: *, Allow: /core/*.css$, and Disallow: /core/*.jpeg. In this example:

- User-agent: * signifies that the instructions in the following lines apply to all crawlers.

- Allow: /core/*.css$ indicates that crawlers may crawl CSS files located in the "core" directory of the site. The * matches any sequence of characters, including subdirectory names, before the ".css" extension, and the "$" means that the URL must end with ".css".

- Disallow: /core/*.jpeg means that crawlers should not crawl files in the "core" directory whose URLs contain ".jpeg". Because the rule has no trailing "$", it also matches longer URLs, such as those with a query string after the extension.
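The two rules described above can be tried against sample paths. The sketch below is an illustration in Python, not the logic of any specific crawler. It assumes the precedence described in RFC 9309 and in Google's documentation: the longest matching pattern wins, and Allow wins a tie. The sample paths and the name may_crawl are invented for the example.

```python
import re

# The rules from the example, as (directive, path pattern) pairs.
RULES = [
    ("allow", "/core/*.css$"),
    ("disallow", "/core/*.jpeg"),
]


def _matches(pattern: str, url_path: str) -> bool:
    """Check one pattern: "*" is a wildcard, a trailing "$" anchors the end."""
    anchored = pattern.endswith("$")
    body = pattern[:-1] if anchored else pattern
    regex = ".*".join(re.escape(piece) for piece in body.split("*"))
    return re.match(regex + (r"\Z" if anchored else ""), url_path) is not None


def may_crawl(url_path: str, rules=RULES) -> bool:
    """Decide whether `url_path` may be crawled under `rules`."""
    # Collect (pattern length, is_allow) for every rule that matches the path.
    matching = [
        (len(pattern), directive == "allow")
        for directive, pattern in rules
        if _matches(pattern, url_path)
    ]
    if not matching:
        return True  # A path that matches no rule is allowed by default.
    # max() prefers the longest pattern; on equal length, True (allow) beats False.
    return max(matching)[1]
```

Here may_crawl("/core/themes/olivero/css/base.css") returns True and may_crawl("/core/misc/logo.jpeg") returns False. A path outside the "core" directory, such as "/node/1", matches neither rule and is therefore allowed.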

How to Configure the RobotsTxt Module Correctly?

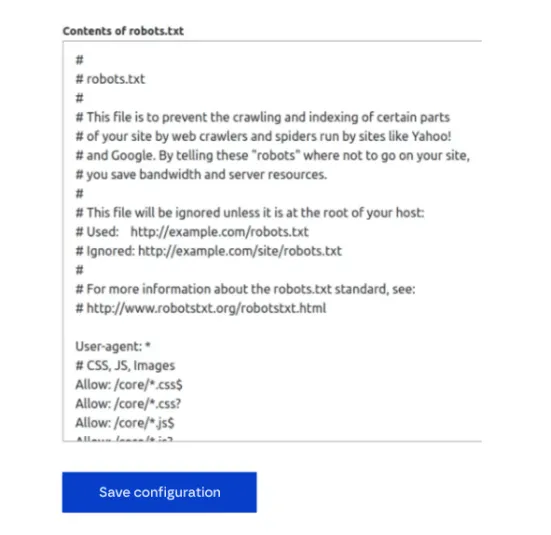

To configure your Drupal robots.txt file using the RobotsTxt module:

1. Navigate to /admin/config/search/robotstxt or find the configuration under Drupal’s admin menu.

2. In the "Contents of robots.txt" field, enter your specific directives based on your SEO and indexing needs.

3. After adding all necessary directives, click Save configuration.

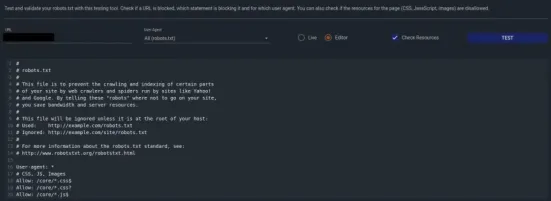

After saving the file, we recommend testing it. You can use tools available online, e.g. the robots.txt Tester on technicalseo.com (SEO Tools → robots.txt Tester).

Source: technicalseo.com

Keep in mind that proper robots.txt configuration requires familiarity with the specifics of the website, as well as an understanding of its business goals and users' needs. Improper settings can accidentally exclude important content from indexing and hurt the website’s visibility in search results.

To ensure the RobotsTxt module works properly, either delete the default robots.txt file that ships with Drupal or rename it as a backup. As long as a physical robots.txt file exists in the web root, the web server serves it directly and the module's version is never used. Renaming the file lets the module serve its own robots.txt while preserving the one created by default during the Drupal application creation process.
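The renaming step can also be scripted. The following is a small sketch in Python using only the standard library. The function name back_up_static_robots and the backup file name robots.txt.bak are arbitrary choices for this example, and you need to pass the web root of your own installation.

```python
from pathlib import Path
from typing import Optional


def back_up_static_robots(
    web_root: Path, backup_name: str = "robots.txt.bak"
) -> Optional[Path]:
    """Rename web_root/robots.txt so the web server stops serving it directly.

    Returns the backup path, or None if there was no static file to move.
    """
    source = web_root / "robots.txt"
    if not source.is_file():
        return None
    target = web_root / backup_name
    # Refuse to overwrite an earlier backup.
    if target.exists():
        raise FileExistsError(f"Backup already exists: {target}")
    source.rename(target)
    return target
```

For a project whose web root is a directory named web (an assumption that depends on your setup), the call would be back_up_static_robots(Path("web")). The function returns None when no static file is present, so running it twice is harmless.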

Benefits of Using the RobotsTxt Module

Here are the most important benefits of implementing this solution on your website:

- Convenient creation and updating of the robots.txt file without having to modify the source code.

- Improved visibility in search results.

- Clear user interface.

- Help in controlling the indexing of images, videos, and other multimedia elements that do not affect the position of your website in search results.

- Supervision of how robots browse your website. Failure to use this file may lead to excessive consumption of the so-called crawl budget, i.e. the number of visits that bots make to your website in a given period. If crawlers spend that budget on low-value pages, more important pages may be pushed to the background or ignored by the search engine, which can harm your SEO.

- Keeping crawlers away from content or pages that do not need to appear in search results.

Robots.txt File Limitations

Like any tool, robots.txt has its limitations:

- No uniform interpretation – the file follows the rules of the Robots Exclusion Protocol (published as RFC 9309 in 2022), but indexing robots do not all implement it identically. Although most known robots adhere to the rules outlined in the robots.txt file, each may interpret them slightly differently. Using the correct syntax is essential to prevent robots from misunderstanding your directives.

- Limited blocking options – the robots.txt file will not always completely disable access to given resources. Some bots may still try to visit blocked pages.

Summary

The RobotsTxt module offers intuitive and fast robots.txt file configuration. By integrating it with other modules and defining custom rules, you can adapt the robots.txt file to your website’s needs. The simple interface and the flexibility in managing instructions make the module a great solution for every owner of a Drupal-based website.

If you have problems configuring your robots.txt file, don't hesitate to contact Drupal development experts.